IRAM: The last ounce of RAM on an ESP32

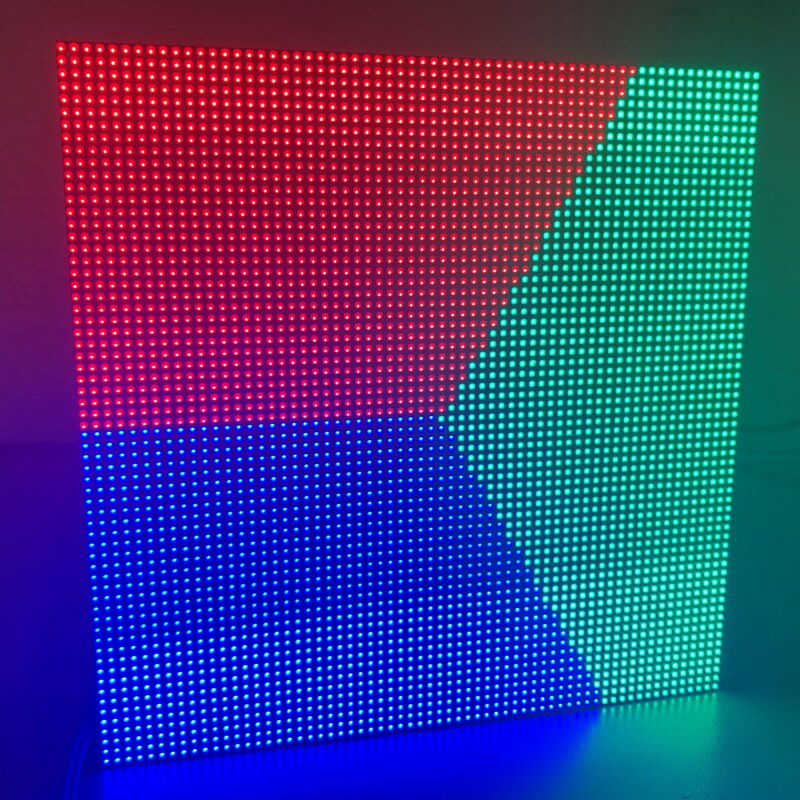

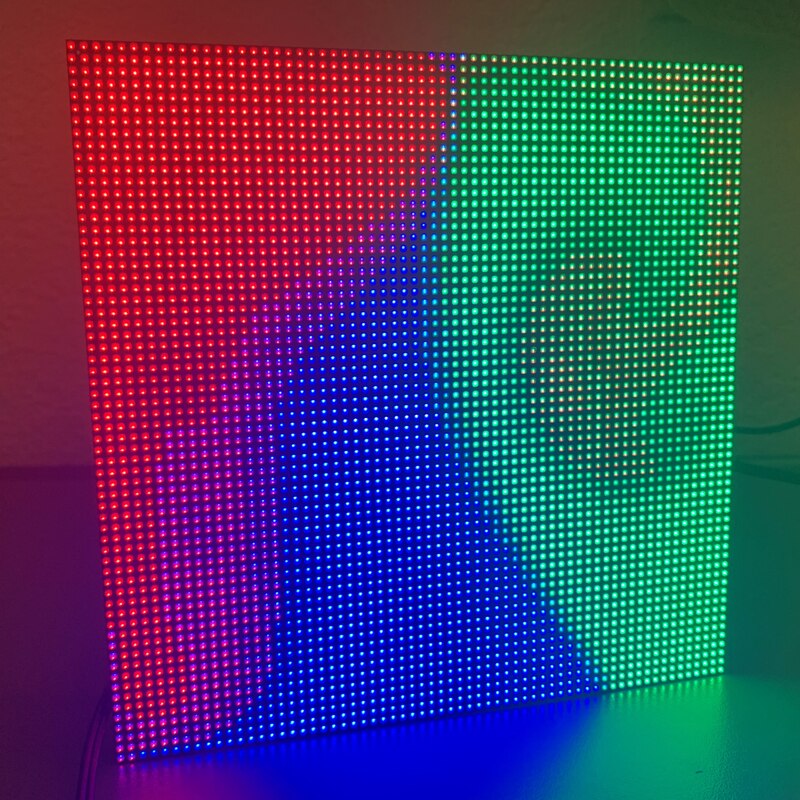

One of my summer projects was clearly about to run over time, but I also realized that I needed some kind of platform up and running to check my progress so far. So, once again, I tried to cram a large algorithm into an ESP32. This time, it was a basic fluid simulation, as described in Real-Time Fluid Dynamics for Games by Jos Stam.

Just barely, I managed to get the ESP32 to do it, and you can see the details and code here. After playing with and getting stuck on the concepts for months, putting that code together was simple. However, getting that code to run was a different story. In the end, I pretty much needed every ounce of RAM the ESP32 had, and the last ounce, the IRAM, was a real challenge to get.

I had been browsing the esp-idf documentation when I came across the entry about IRAM. Although IRAM (Instruction RAM) is not intended for data, it can be allocated with the call heap_caps_malloc(size, MALLOC_CAP_32BIT) as long as the data elements are 32 bits wide. A float is that wide, I thought, so why can’t I use that? When I tried, though, I got a “LoadStoreError”.

The reason turned out to be pretty odd: in practice, only 32-bit integers could be stored in IRAM. In this GitHub issue, I read that floats can’t simply be stored in IRAM because the compiler doesn’t use the l32i and s32i instructions to load and store them. I was about to accept this and switch to Q31 fixed-point instead, but then I realized that the bits of a float just had to be reinterpreted as an integer. It didn’t matter what value those bits represented as an integer.
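Here is a minimal sketch of that reinterpretation on its own, separate from the project code. It assumes a 32-bit float, and the helper names float_to_bits and bits_to_float are hypothetical. It uses std::memcpy to copy the bits, which is one portable way to do this, rather than the reinterpret_cast I ended up using.

```cpp
#include <cassert>
#include <cstdint>
#include <cstring>

static_assert(sizeof(float) == sizeof(std::uint32_t), "assumes a 32-bit float");

// Copy the bit pattern of a float into an integer; no numeric conversion happens.
std::uint32_t float_to_bits(float f) {
    std::uint32_t bits;
    std::memcpy(&bits, &f, sizeof bits);
    return bits;
}

// Inverse: rebuild the float from the stored bit pattern.
float bits_to_float(std::uint32_t bits) {
    float f;
    std::memcpy(&f, &bits, sizeof f);
    return f;
}

// Round trip: the integer is only a container, so the float comes back unchanged.
void round_trip_demo() {
    float original = 0.15625f;
    std::uint32_t stored = float_to_bits(original);
    assert(bits_to_float(stored) == original);
}
```

With IEEE 754 single precision, 1.0f is stored as the integer 0x3F800000. That number means nothing as an integer, but it converts back to exactly 1.0f.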

Once I realized this, I wrote a class that would behave like a float but would be stored only as a uint32_t. From there, I used reinterpret_cast to force the bits to be reinterpreted. Still, I was getting the “LoadStoreError”. At this point, I wondered if the extra steps I was taking were just being optimized away. To make sure, I made the uint32_t member a volatile uint32_t, since I knew the compiler could not optimize away accesses to volatile data. This required copying the volatile data into a temporary non-volatile variable before I could use it, but with that change it all suddenly worked!

    #include <cstddef>          // size_t
    #include <cstdint>          // uint32_t
    #include "esp_heap_caps.h"  // heap_caps_malloc, MALLOC_CAP_32BIT

    // iram_float_t
    // A float that must be stored in IRAM as an integer. The result is forcing the compiler
    // to use the l32i/s32i instructions (using other instructions causes a LoadStoreError).
    // Original issue (and assembly solution): https://github.com/espressif/esp-idf/issues/3036
    // This code is released into the public domain or under CC0
    class iram_float_t{
    public:
        // Store the bit pattern of the float, not its numeric value
        iram_float_t(float value = 0)
            : _value(*reinterpret_cast<volatile unsigned int*>(&value)) {}

        void* operator new[] (size_t size){ // Allows allocation from IRAM
            return heap_caps_malloc(size, MALLOC_CAP_32BIT);
        }

        operator float() const {
            uint32_t a_raw = _value; // Copy out of volatile storage first
            return *reinterpret_cast<float*>(&a_raw);
        }

    private:
        volatile unsigned int _value; // volatile keeps the integer access from being optimized away
    };

This is the final version of the class. The new[] operator is overloaded to allocate from IRAM, and the constructor and conversion operator make conversions to and from float implicit. Since the issue was at the assembly level, it probably could have been solved in assembly, but this high-level solution was extremely simple to implement and use. In many cases, it should only take a couple of substitutions to use this class, and it leaves many things up to the compiler to optimize. The key side effect, though, is that every access to volatile memory requires an extra instruction to deal with the temporary register. Overall, I’ve observed about a 30% performance loss, even though I didn’t depend too heavily on IRAM.
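To show what "a couple of substitutions" might look like, here is a hedged sketch rather than code from the project. It is a variant of the class above with several assumptions of mine: std::memcpy replaces reinterpret_cast, a matching delete[] is added, new[] is marked noexcept so a failed allocation gives nullptr, and when ESP_PLATFORM is not defined it falls back to plain malloc so it also builds off-target. scale_field is a hypothetical helper, and I have not measured this variant on hardware.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <cstdlib>
#include <cstring>

#ifdef ESP_PLATFORM
#include "esp_heap_caps.h"  // heap_caps_malloc, heap_caps_free
#endif

class iram_float_t {
public:
    iram_float_t(float value = 0.0f) : _value(to_bits(value)) {}

    operator float() const {
        std::uint32_t raw = _value;  // volatile 32-bit integer load into a temporary
        float f;
        std::memcpy(&f, &raw, sizeof f);
        return f;
    }

    // noexcept: if allocation fails, the new[] expression yields nullptr
    void* operator new[](std::size_t size) noexcept {
#ifdef ESP_PLATFORM
        return heap_caps_malloc(size, MALLOC_CAP_32BIT);
#else
        return std::malloc(size);  // host fallback (assumption, for off-target builds)
#endif
    }

    void operator delete[](void* p) noexcept {
#ifdef ESP_PLATFORM
        heap_caps_free(p);
#else
        std::free(p);
#endif
    }

private:
    static std::uint32_t to_bits(float f) {
        std::uint32_t raw;
        std::memcpy(&raw, &f, sizeof raw);
        return raw;
    }

    volatile std::uint32_t _value;
};

static_assert(sizeof(iram_float_t) == sizeof(std::uint32_t),
              "each element must be exactly one 32-bit word");

// Hypothetical helper: the same loop a float* version would use.
void scale_field(iram_float_t* field, std::size_t n, float factor) {
    assert(field != nullptr);
    for (std::size_t i = 0; i < n; ++i) {
        // field[i] *= factor would not compile: the class has no compound
        // assignment, so read as float, compute, then assign back.
        field[i] = field[i] * factor;
    }
}
```

The substitution is then changing a declaration such as float* density = new float[n] to iram_float_t* density = new iram_float_t[n], checking the result for nullptr, and rewriting any compound assignments like += in the long form shown in the loop.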

Still, it was the last ounce of RAM I needed, and if anyone else needs to store floats in IRAM, I hope this workaround helps.